Validating Material Composition Data Using SHACL With SPARQL-Based Constraints With Aggregates

This is the third in a series of posts about using SHACL to validate material composition data for semiconductor products (microchips). This results from a recent project we undertook for Nexperia. In the first post we looked at the basic data model for material composition and how basic SHACL vocabulary can be used to describe the constraints. In the second post we looked at how SPARQL-based constraints can be used to implement more complex rules based on a SPARQL SELECT query. In this post we will continue to look at SPARQL-based constraints and how aggregates can be used as part of validation rules.

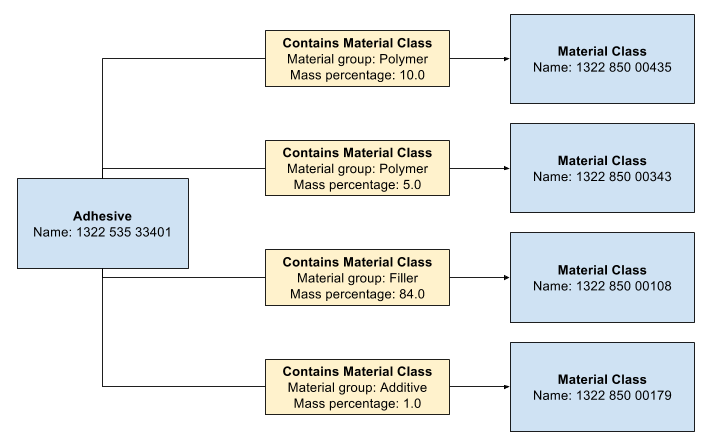

For each material we have the composition of the material in terms of the mass percentage of the substances it contains.

For example, the adhesive 1322 535 33401:

The same graph expressed in RDF:

    <132253533401> a plm:Adhesive ;
        plm:containsMaterialClass <132285000108>, <132285000179>, <132285000343>, <132285000435> ;
        plm:name "1322 535 33401" ;
        plm:qualifiedRelation [
            a plm:ContainsMaterialClassRelation ;
            plm:massPercentage 10.0 ;
            plm:materialGroup "Polymer" ;
            plm:target <132285000435>
        ] ;
        plm:qualifiedRelation [
            a plm:ContainsMaterialClassRelation ;
            plm:massPercentage 5.0 ;
            plm:materialGroup "Polymer" ;
            plm:target <132285000343>
        ] ;
        plm:qualifiedRelation [
            a plm:ContainsMaterialClassRelation ;
            plm:massPercentage 84.0 ;
            plm:materialGroup "Filler" ;
            plm:target <132285000108>
        ] ;
        plm:qualifiedRelation [
            a plm:ContainsMaterialClassRelation ;
            plm:massPercentage 1.0 ;
            plm:materialGroup "Additive" ;
            plm:target <132285000179>
        ] .

In this case we can see the mass percentages sum to 100% as one would expect:

10.0 + 5.0 + 84.0 + 1.0 = 100.0

However, for other materials we observe this is not the case and we would like to define a constraint to check for this. This could be due to a typo when entering data, or because some materials are entered as having some small ‘trace’ percentage of a substance, whereby the total is not exactly 100%.

As with the previous post, we first begin by defining a SPARQL query that implements the logic for the check.

In this case, we want to aggregate per material and calculate the sum of plm:massPercentage on the related plm:ContainsMaterialClassRelation via the plm:qualifiedRelation property.

A query that implements this logic is as follows:

    PREFIX plm: <http://example.com/def/plm/>

    SELECT ?material (sum(?massPercentage) as ?sumMassPercentage) {
        ?material plm:qualifiedRelation/plm:massPercentage ?massPercentage .
    }
    GROUP BY ?material
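For readers less familiar with SPARQL aggregates, the grouping and summing can be sketched in plain Python. This is only an illustration of the logic, not how a SPARQL engine is implemented. The second material identifier and its `"n/a"` value are hypothetical, chosen to show how a value that cannot be treated as a number leaves the whole sum empty.

```python
from collections import defaultdict
from decimal import Decimal, InvalidOperation

# (material, massPercentage) pairs, as the path
# plm:qualifiedRelation/plm:massPercentage would bind them.
# The first material is the adhesive from the example above;
# the second one is hypothetical.
ROWS = [
    ("132253533401", "10.0"),
    ("132253533401", "5.0"),
    ("132253533401", "84.0"),
    ("132253533401", "1.0"),
    ("material-with-bad-value", "99.0"),
    ("material-with-bad-value", "n/a"),  # not a number
]


def sum_mass_percentage(rows):
    """Group rows by material and sum the mass percentages.

    A group containing a non-numeric value gets None, mimicking an
    unbound ?sumMassPercentage in the SPARQL results.
    """
    groups = defaultdict(list)
    for material, value in rows:
        groups[material].append(value)

    totals = {}
    for material, values in groups.items():
        try:
            # Decimal keeps the arithmetic exact, like xsd:decimal.
            totals[material] = sum(Decimal(v) for v in values)
        except InvalidOperation:
            totals[material] = None
    return totals


totals = sum_mass_percentage(ROWS)
```

Using `Decimal` rather than `float` mirrors the fact that Turtle literals such as `10.0` are `xsd:decimal`, so comparing the total against 100 is exact.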

Running this query over the example data yields these results:

    ---------------------------------------------------------
    | material                          | sumMassPercentage |
    =========================================================
    | <http://example.com/032226800047> | 100.00            |
    | <http://example.com/132295500317> | 100.0             |
    | <http://example.com/132299586663> | 100.00            |
    | <http://example.com/132253533401> | 100.0             |
    | <http://example.com/331214892031> | 100.1             |
    | <http://example.com/340000130609> |                   |
    | <http://example.com/132299586251> | 100.00            |
    | <http://example.com/344000000687> | 100.00            |
    | <http://example.com/331206306701> | 100.00            |
    | <http://example.com/340007000868> | 100.0             |
    ---------------------------------------------------------

We can see that:

- <http://example.com/331214892031> has a total mass percentage of 100.1%, which is suspect.
- <http://example.com/340000130609> has a missing sum (due to an incorrect datatype on the value, which is already validated in our shape file).

There are various ways we can write this SPARQL constraint in SHACL, but using a property shape with a path seems the best fit:

    :shape1 a sh:NodeShape ;
        sh:targetSubjectsOf plm:containsMaterialClass ;
        sh:property [
            sh:path ( plm:qualifiedRelation plm:massPercentage ) ;
            sh:severity sh:Warning ;
            sh:sparql [
                a sh:SPARQLConstraint ;
                sh:message "Mass percentage of contained material classes should sum to 100%" ;
                sh:prefixes plm: ;
                sh:select """
                    SELECT $this (sum(?massPercentage) as ?value) {
                        $this $PATH ?massPercentage .
                    }
                    GROUP BY $this
                    HAVING (sum(?massPercentage) != 100)
                    """
            ]
        ] .

Here we have a sh:NodeShape that targets all resources that are the subject of the plm:containsMaterialClass property, which is logically any material (i.e. the rdfs:domain of the property is plm:Material).

This saves having to enumerate all the different classes of materials or to materialize the inferred class memberships in the data.

Next we have defined the sh:property on this with the SHACL Property path ( plm:qualifiedRelation plm:massPercentage ), which is equivalent to the SPARQL Property path plm:qualifiedRelation/plm:massPercentage from our query.

In the sh:sparql part, we define the SPARQL query where the $PATH variable will be substituted with the SPARQL Property path at runtime.
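The substitution step can be sketched in a few lines of Python. This is a simplified illustration that assumes the path is a plain sequence of predicates. The helper names `sequence_path_to_sparql` and `substitute_path` are hypothetical, and a real SHACL engine also handles other path forms (inverse, alternative and so on) and binds `$this` to each focus node.

```python
def sequence_path_to_sparql(path):
    """Render a SHACL sequence path (a list of prefixed names) as a SPARQL property path."""
    return "/".join(path)


def substitute_path(select_query, path):
    """Replace $PATH in the query text with the SPARQL form of the path."""
    return select_query.replace("$PATH", sequence_path_to_sparql(path))


# The sh:select text from the shape.
SELECT_TEMPLATE = """
SELECT $this (sum(?massPercentage) as ?value) {
    $this $PATH ?massPercentage .
}
GROUP BY $this
HAVING (sum(?massPercentage) != 100)
"""

# The sh:path of the property shape, as a list of its members.
query = substitute_path(
    SELECT_TEMPLATE,
    ["plm:qualifiedRelation", "plm:massPercentage"],
)
```

After substitution, the triple pattern reads `$this plm:qualifiedRelation/plm:massPercentage ?massPercentage .`, which matches the pattern in the standalone query.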

As we are using SPARQL aggregates to calculate the total mass percentage of the substances in a material, we use the HAVING keyword to operate over the grouped solution set (in the same way that FILTER operates over un-grouped ones) to only return results that are not equal to 100.

Recall from the last post in the series that the SPARQL query must be written such that it gives results for things that do not match the constraint.
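The effect of HAVING on the grouped results can be sketched in Python as a filter over per-material totals. The three totals below are taken from the results table of the standalone query, with `None` standing in for the missing sum. The function name `having_not_100` is hypothetical.

```python
from decimal import Decimal

# Per-material totals from the standalone query results;
# None stands for the unbound (missing) sum.
TOTALS = {
    "132253533401": Decimal("100.0"),
    "331214892031": Decimal("100.1"),
    "340000130609": None,
}


def having_not_100(totals):
    """Keep only groups whose total differs from 100, like HAVING (sum(...) != 100)."""
    violations = {}
    for material, total in totals.items():
        if total is None:
            # Comparing an unbound value is an error in SPARQL, and a
            # filter that errors drops the solution instead of reporting it.
            continue
        if total != 100:
            violations[material] = total
    return violations


violations = having_not_100(TOTALS)
```

Only the material with a total of 100.1 survives the filter. The material with the missing sum is not reported by this constraint, so it has to be caught by the separate datatype check.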

Note we also define the severity of this constraint as sh:Warning.

This is because we do not want the data processing pipeline to fail, but the warning should be logged and reported to the responsible person.
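How a pipeline might act on the severity can be sketched as follows. This is a hypothetical outline, not the pipeline from the project: the validation results are assumed to have already been reduced to plain dictionaries, and the key names and the `process_results` function are invented for illustration.

```python
import logging

logger = logging.getLogger("validation")

# Hypothetical validation results, reduced to plain dicts.
RESULTS = [
    {
        "focus_node": "http://example.com/331214892031",
        "severity": "Warning",
        "message": "Mass percentage of contained material classes should sum to 100%",
        "value": "100.1",
    },
]


def process_results(results):
    """Log every result and return True if the pipeline may continue.

    Only results with severity Violation block the pipeline;
    Warning and Info results are logged for follow-up.
    """
    may_continue = True
    for result in results:
        line = "%s: %s (value: %s)" % (
            result["focus_node"], result["message"], result["value"]
        )
        if result["severity"] == "Violation":
            logger.error(line)
            may_continue = False
        else:
            logger.warning(line)
    return may_continue


may_continue = process_results(RESULTS)
```

With the single warning above, `may_continue` stays true, so processing carries on while the message is still available for whoever is responsible for the data.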

The extended shape file is available here.

If we use this shape file to validate our data, we see the additional validation result:

    [ a sh:ValidationResult ;
        sh:focusNode <http://example.com/331214892031> ;
        sh:resultMessage "Mass percentage of contained material classes should sum to 100%" ;
        sh:resultPath ( plm:qualifiedRelation plm:massPercentage ) ;
        sh:resultSeverity sh:Warning ;
        sh:sourceConstraint [] ;
        sh:sourceConstraintComponent sh:SPARQLConstraintComponent ;
        sh:sourceShape [] ;
        sh:value 100.1
    ]

This tallies with the results from our standalone query.

This demonstrates that non-trivial rules involving calculated aggregate values can be implemented using SPARQL-based constraints in SHACL using the HAVING keyword to filter the grouped solution sets.

As we are using W3C standards, we can be sure we avoid vendor-specific solutions and thus lock-in.

In the next post in the series, we will look at using OWL and SHACL to implement inferencing rules for classification of materials.