In a recent post, Dean Allemang explained why he’s not excited about RDF-star.

In this post I want to expand on Dean’s post with more concrete examples to illustrate why I’m concerned about RDF-star.

Global triples and occurrences of triples

In the flights example, Dean mentions that using RDF-star would encourage poor modeling practice.

So how exactly would that manifest? Suppose we choose to model multiple flights with multiple triples:

```turtle
:NYC :connection :SFO .
:NYC :connection :SFO .
:NYC :connection :SFO .
```

First, we note that “an RDF-star triple is an abstract entity whose identity is entirely defined by its subject, predicate, and object” and “this unique triple (s, p, o) can be quoted as the subject or object of multiple other triples, but must be assumed to represent the same thing everywhere it occurs”.

So in the above example, we have the same RDF-star triple asserted three times.

This will already be familiar to experienced RDF users: an RDF graph is a set of triples, so when a graph containing duplicate triples is processed, the duplicates may be collapsed into one.
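To make the set semantics concrete, here is a minimal Python sketch. It assumes, purely for illustration, that terms are plain strings and that a triple is a `(subject, predicate, object)` tuple; it does not use any RDF library.

```python
# Illustrative model only: terms are plain strings and a triple is a
# (subject, predicate, object) tuple. An RDF graph is a *set* of triples.
graph = set()

# "Assert" the same flight triple three times.
for _ in range(3):
    graph.add((":NYC", ":connection", ":SFO"))

# Identical tuples are equal, so the set keeps only one of them.
print(len(graph))  # 1
```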

When quoting this triple in RDF-star, a novice user might assert additional information about the multiple flights as follows:

```turtle
:NYC :connection :SFO .
<< :NYC :connection :SFO >>
    :departureGate "10" ;
    :departureTime "2023-04-22T00:00:00Z"^^xsd:dateTime ;
    :arrivalTime "2023-04-22T06:00:00Z"^^xsd:dateTime .

:NYC :connection :SFO .
<< :NYC :connection :SFO >>
    :departureGate "20" ;
    :departureTime "2023-04-23T00:00:00Z"^^xsd:dateTime ;
    :arrivalTime "2023-04-23T06:00:00Z"^^xsd:dateTime .

:NYC :connection :SFO .
<< :NYC :connection :SFO >>
    :departureGate "30" ;
    :departureTime "2023-04-24T00:00:00Z"^^xsd:dateTime ;
    :arrivalTime "2023-04-24T06:00:00Z"^^xsd:dateTime .
```

Or the equivalent using annotation syntax:

```turtle
:NYC :connection :SFO {|
    :departureGate "10" ;
    :departureTime "2023-04-22T00:00:00Z"^^xsd:dateTime ;
    :arrivalTime "2023-04-22T06:00:00Z"^^xsd:dateTime
|} .

:NYC :connection :SFO {|
    :departureGate "20" ;
    :departureTime "2023-04-23T00:00:00Z"^^xsd:dateTime ;
    :arrivalTime "2023-04-23T06:00:00Z"^^xsd:dateTime
|} .

:NYC :connection :SFO {|
    :departureGate "30" ;
    :departureTime "2023-04-24T00:00:00Z"^^xsd:dateTime ;
    :arrivalTime "2023-04-24T06:00:00Z"^^xsd:dateTime
|} .
```

However, running this through the Jena Riot command-line tool with formatted output, we immediately see a problem:

```turtle
:NYC :connection :SFO .

<< :NYC :connection :SFO >>
        :arrivalTime
                "2023-04-24T06:00:00Z"^^xsd:dateTime ,
                "2023-04-23T06:00:00Z"^^xsd:dateTime ,
                "2023-04-22T06:00:00Z"^^xsd:dateTime ;
        :departureGate
                "30" ,
                "20" ,
                "10" ;
        :departureTime
                "2023-04-24T00:00:00Z"^^xsd:dateTime ,
                "2023-04-23T00:00:00Z"^^xsd:dateTime ,
                "2023-04-22T00:00:00Z"^^xsd:dateTime .
```

Because the quoted triple represents the same thing (resource) everywhere it occurs, all three sets of annotations use the same resource identifier (the quoted triple), so they clash.

Thus, we end up with all the statements being about the same thing, not about three different flights.

So it is impossible to know which departure gate relates to which departure time: not ideal if we don’t want to miss our flight!
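The same effect can be reproduced in a small Python sketch. It is an illustration under simplifying assumptions, not a description of how Jena stores data: a quoted triple is modeled as a plain tuple (so its identity is fully determined by its subject, predicate and object), literals are plain strings, and `annotations` is a hypothetical map from subject to predicate to a set of objects.

```python
from collections import defaultdict

# Hypothetical store: subject -> predicate -> set of objects.
annotations = defaultdict(lambda: defaultdict(set))

flights = [
    ("10", "2023-04-22T00:00:00Z", "2023-04-22T06:00:00Z"),
    ("20", "2023-04-23T00:00:00Z", "2023-04-23T06:00:00Z"),
    ("30", "2023-04-24T00:00:00Z", "2023-04-24T06:00:00Z"),
]

for gate, departs, arrives in flights:
    # Each << :NYC :connection :SFO >> is built afresh, but the tuples are
    # equal, so every flight selects the same dictionary entry.
    quoted = (":NYC", ":connection", ":SFO")
    annotations[quoted][":departureGate"].add(gate)
    annotations[quoted][":departureTime"].add(departs)
    annotations[quoted][":arrivalTime"].add(arrives)

merged = annotations[(":NYC", ":connection", ":SFO")]
print(len(annotations))                  # 1
print(sorted(merged[":departureGate"]))  # ['10', '20', '30']
```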

Querying the graph with SPARQL-star further illustrates the effect:

```sparql
select *
where {
  :NYC :connection :SFO {|
    :departureGate ?gate ;
    :departureTime ?departs ;
    :arrivalTime ?arrives
  |} .
}
```

This gives the following results:

```
--------------------------------------------------------------------------------------
| gate | departs                              | arrives                              |
======================================================================================
| "10" | "2023-04-24T00:00:00Z"^^xsd:dateTime | "2023-04-23T06:00:00Z"^^xsd:dateTime |
| "10" | "2023-04-24T00:00:00Z"^^xsd:dateTime | "2023-04-24T06:00:00Z"^^xsd:dateTime |
| "10" | "2023-04-24T00:00:00Z"^^xsd:dateTime | "2023-04-22T06:00:00Z"^^xsd:dateTime |
| "10" | "2023-04-22T00:00:00Z"^^xsd:dateTime | "2023-04-23T06:00:00Z"^^xsd:dateTime |
| "10" | "2023-04-22T00:00:00Z"^^xsd:dateTime | "2023-04-24T06:00:00Z"^^xsd:dateTime |
| "10" | "2023-04-22T00:00:00Z"^^xsd:dateTime | "2023-04-22T06:00:00Z"^^xsd:dateTime |
| "10" | "2023-04-23T00:00:00Z"^^xsd:dateTime | "2023-04-23T06:00:00Z"^^xsd:dateTime |
| "10" | "2023-04-23T00:00:00Z"^^xsd:dateTime | "2023-04-24T06:00:00Z"^^xsd:dateTime |
| "10" | "2023-04-23T00:00:00Z"^^xsd:dateTime | "2023-04-22T06:00:00Z"^^xsd:dateTime |
| "20" | "2023-04-24T00:00:00Z"^^xsd:dateTime | "2023-04-23T06:00:00Z"^^xsd:dateTime |
| "20" | "2023-04-24T00:00:00Z"^^xsd:dateTime | "2023-04-24T06:00:00Z"^^xsd:dateTime |
| "20" | "2023-04-24T00:00:00Z"^^xsd:dateTime | "2023-04-22T06:00:00Z"^^xsd:dateTime |
| "20" | "2023-04-22T00:00:00Z"^^xsd:dateTime | "2023-04-23T06:00:00Z"^^xsd:dateTime |
| "20" | "2023-04-22T00:00:00Z"^^xsd:dateTime | "2023-04-24T06:00:00Z"^^xsd:dateTime |
| "20" | "2023-04-22T00:00:00Z"^^xsd:dateTime | "2023-04-22T06:00:00Z"^^xsd:dateTime |
| "20" | "2023-04-23T00:00:00Z"^^xsd:dateTime | "2023-04-23T06:00:00Z"^^xsd:dateTime |
| "20" | "2023-04-23T00:00:00Z"^^xsd:dateTime | "2023-04-24T06:00:00Z"^^xsd:dateTime |
| "20" | "2023-04-23T00:00:00Z"^^xsd:dateTime | "2023-04-22T06:00:00Z"^^xsd:dateTime |
| "30" | "2023-04-24T00:00:00Z"^^xsd:dateTime | "2023-04-23T06:00:00Z"^^xsd:dateTime |
| "30" | "2023-04-24T00:00:00Z"^^xsd:dateTime | "2023-04-24T06:00:00Z"^^xsd:dateTime |
| "30" | "2023-04-24T00:00:00Z"^^xsd:dateTime | "2023-04-22T06:00:00Z"^^xsd:dateTime |
| "30" | "2023-04-22T00:00:00Z"^^xsd:dateTime | "2023-04-23T06:00:00Z"^^xsd:dateTime |
| "30" | "2023-04-22T00:00:00Z"^^xsd:dateTime | "2023-04-24T06:00:00Z"^^xsd:dateTime |
| "30" | "2023-04-22T00:00:00Z"^^xsd:dateTime | "2023-04-22T06:00:00Z"^^xsd:dateTime |
| "30" | "2023-04-23T00:00:00Z"^^xsd:dateTime | "2023-04-23T06:00:00Z"^^xsd:dateTime |
| "30" | "2023-04-23T00:00:00Z"^^xsd:dateTime | "2023-04-24T06:00:00Z"^^xsd:dateTime |
| "30" | "2023-04-23T00:00:00Z"^^xsd:dateTime | "2023-04-22T06:00:00Z"^^xsd:dateTime |
--------------------------------------------------------------------------------------
```

We end up with the cross-product of the three values for each of :departureGate, :departureTime and :arrivalTime: 3 × 3 × 3 = 27 rows, of which only three describe an actual flight.
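The row count follows directly from the shape of the query. As a hedged sketch, with literals modeled as plain strings rather than typed values: the three triple patterns share only the quoted-triple subject, so joining them amounts to a cross product of the three value sets, which `itertools.product` computes.

```python
from itertools import product

# The merged values hanging off the single quoted-triple subject
# (literal datatypes omitted for brevity).
gates = ["10", "20", "30"]
departs = ["2023-04-22T00:00:00Z", "2023-04-23T00:00:00Z", "2023-04-24T00:00:00Z"]
arrives = ["2023-04-22T06:00:00Z", "2023-04-23T06:00:00Z", "2023-04-24T06:00:00Z"]

# No variable other than the shared subject links the three patterns,
# so every gate pairs with every departure time and every arrival time.
rows = list(product(gates, departs, arrives))
print(len(rows))  # 27

# The three real flights (values at the same position belong together)
# are buried among the 27 combinations.
real = set(zip(gates, departs, arrives))
print(len(real & set(rows)))  # 3
```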

The RDF-star specification recognizes the distinction between global triples and specific asserted occurrences of triples.

The latter requires additional nodes in the graph to represent the distinct occurrences of the triple.

Following the approach presented in the specification:

```turtle
:NYC :connection :SFO .

[] :occurrenceOf << :NYC :connection :SFO >> ;
    :departureGate "10" ;
    :departureTime "2023-04-22T00:00:00Z"^^xsd:dateTime ;
    :arrivalTime "2023-04-22T06:00:00Z"^^xsd:dateTime .

[] :occurrenceOf << :NYC :connection :SFO >> ;
    :departureGate "20" ;
    :departureTime "2023-04-23T00:00:00Z"^^xsd:dateTime ;
    :arrivalTime "2023-04-23T06:00:00Z"^^xsd:dateTime .

[] :occurrenceOf << :NYC :connection :SFO >> ;
    :departureGate "30" ;
    :departureTime "2023-04-24T00:00:00Z"^^xsd:dateTime ;
    :arrivalTime "2023-04-24T06:00:00Z"^^xsd:dateTime .
```
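Modeled the same hedged way in Python (quoted triple as a tuple, literals as plain strings), a distinct node per occurrence keeps each flight's values together. The node labels `_:f1` to `_:f3` are hypothetical stand-ins for the anonymous `[]` blank nodes, and the `value` helper is illustrative only.

```python
# Quoted triple as a plain tuple; literals as plain strings.
quoted = (":NYC", ":connection", ":SFO")
flights = [
    ("10", "2023-04-22T00:00:00Z", "2023-04-22T06:00:00Z"),
    ("20", "2023-04-23T00:00:00Z", "2023-04-23T06:00:00Z"),
    ("30", "2023-04-24T00:00:00Z", "2023-04-24T06:00:00Z"),
]

graph = set()
for i, (gate, departs, arrives) in enumerate(flights, start=1):
    node = f"_:f{i}"  # one fresh node per occurrence of the triple
    graph |= {
        (node, ":occurrenceOf", quoted),
        (node, ":departureGate", gate),
        (node, ":departureTime", departs),
        (node, ":arrivalTime", arrives),
    }

def value(node, predicate):
    """Return the single object for (node, predicate); fail if not exactly one."""
    (obj,) = [o for s, p, o in graph if s == node and p == predicate]
    return obj

# Join on the occurrence node instead of on the quoted triple itself.
occurrences = sorted(s for s, p, o in graph if p == ":occurrenceOf" and o == quoted)
rows = [
    (value(n, ":departureGate"), value(n, ":departureTime"), value(n, ":arrivalTime"))
    for n in occurrences
]
for row in rows:
    print(row)
```

This yields three rows, one per flight, rather than 27.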

However, this approach ends up so close to a ‘proper’ domain model that it would be better to improve the model in the first place.

Other options might be to retain the context of the qualifying statements using named graphs, or add more recursive levels of reification.

Neither is particularly appealing.

Ultimately, the advice on N-ary relations and reification in RDF in the (draft) Working Group Note “Defining N-ary Relations on the Semantic Web” remains relevant, with some tweaks:

It may be natural to think of ~~RDF reification~~ RDF-star when representing n-ary relations. We do not want to use ~~the RDF reification vocabulary~~ RDF-star to represent n-ary relations in general for the following reasons. ~~The RDF reification vocabulary~~ RDF-star is designed to talk about statements—individuals that are instances of ~~rdf:Statement~~ rdf-star:Triple. A statement is a subject, predicate, object triple and reification in ~~RDF~~ RDF-star is used to ~~put~~ assert additional information about this triple. This information may include the source of the information in the triple, for example. In n-ary relations, however, additional arguments in the relation do not usually characterize the statement but rather provide additional information about the relation instance itself. Thus, it is more natural to talk about instances of a diagnosis relation or a purchase rather than about a statement. In the use cases that we discussed in the note, the intent is to talk about instances of a relation, not about statements about such instances.

Qualification of triples

Even when using RDF-star to make statements about statements, we still need to carefully consider how we model those meta-statements.

A good example is if we want to model assertions and retractions of statements (who said what, and when).

Consider the following RDF-star triple:

```turtle
<< _:a :name "Alice" >> :statedBy :bob .
```

If we also want to model when Bob stated this, what would be a good approach?

As shown above, we cannot make more statements about the triple << _:a :name "Alice" >> without potential clashes.

Instead, we need to talk about an occurrence of the triple.

Given that rdfs:Literal is a subclass of rdfs:Resource, it follows that any literal is a resource.

Similarly, a quoted triple is itself a resource.

So, if we want to relate the quoted triple << _:a :name "Alice" >> with Bob and a timestamp, then we have an n-ary relation involving three resources.

As the intent is to talk about the instance of the relation, rather than the statement of the relation, we might introduce some ‘Assertion’ class:

```turtle
[] a :Assertion ;
    :occurrenceOf << _:a :name "Alice" >> ;
    :agent :bob ;
    :atTime "2023-04-30T20:40:40"^^xsd:dateTime .
```

Arguably, this saves a couple of statements compared to the “traditional” RDF reification vocabulary.
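A quick count makes “a couple of statements” concrete. This is a hedged Python sketch: it assumes the “traditional” version types its node as `rdf:Statement` and carries the same `:agent` and `:atTime` properties, and it models terms as plain strings with the quoted triple as a nested tuple. The node labels `_:s` and `_:x` are hypothetical.

```python
# Terms are plain strings; the quoted triple is a nested tuple.
stmt = ("_:a", ":name", '"Alice"')
when = '"2023-04-30T20:40:40"^^xsd:dateTime'

# Traditional reification: the statement node spells out each component.
reified = [
    ("_:s", "rdf:type", "rdf:Statement"),
    ("_:s", "rdf:subject", stmt[0]),
    ("_:s", "rdf:predicate", stmt[1]),
    ("_:s", "rdf:object", stmt[2]),
    ("_:s", ":agent", ":bob"),
    ("_:s", ":atTime", when),
]

# RDF-star: one :occurrenceOf triple points at the quoted triple instead.
rdf_star = [
    ("_:x", "rdf:type", ":Assertion"),
    ("_:x", ":occurrenceOf", stmt),
    ("_:x", ":agent", ":bob"),
    ("_:x", ":atTime", when),
]

print(len(reified), len(rdf_star))  # 6 4
```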

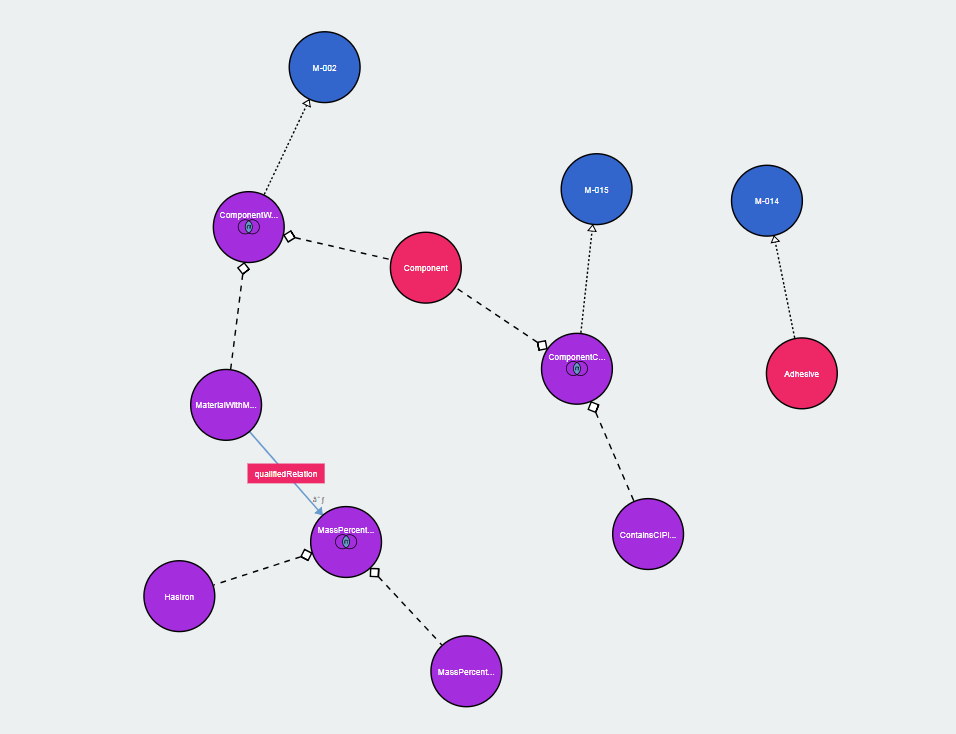

Interoperability with Property Graphs

Using RDF-star to provide interoperability with Property Graphs is mentioned in both the RDF-star Working Group Charter and the RDF-star Use Cases and Requirements.

The Property Graphs and object-oriented databases that I’ve encountered use the edges to represent instances of relations, as opposed to some globally unique triple.

That is, there may be multiple edges/relations of the same relation type between the same nodes, but with different attributes and values on the edges.

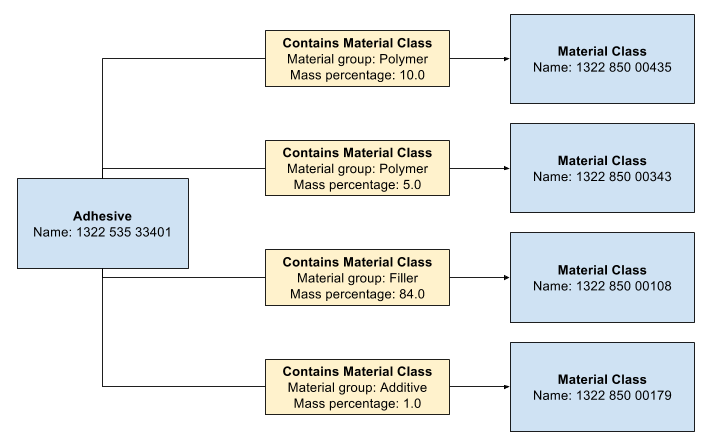

A natural representation of Property Graph data in RDF is therefore to introduce a qualified class per relation type.

Arguably RDF-star is not necessary for this, but if we wish to introduce ‘simple’ binary relations in addition to qualified relations, then using quoted triples would allow us to relate the two.

To illustrate, consider representing a PG relation as follows:

```turtle
<< :Alice :worksFor :ACME >>
    :role :CEO ;
    :since 2010 ;
    :probability 0.8 ;
    :source <http://example.com/news> .
```

This would introduce the issues described above if we were to scrape data about Alice’s employment details from multiple sources that gave differing accounts of her employment history.

To avoid this, we can represent the relation as the instance of some class:

```turtle
[] a :EmployeeRole ;
    :occurrenceOf << :Alice :worksFor :ACME >> ;
    :roleName :CEO ;
    :since 2010 ;
    :probability 0.8 ;
    :source <http://example.com/news> .
```
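The `:EmployeeRole` pattern generalizes to a mechanical mapping from PG edges. The Python sketch below is hypothetical throughout: the `Edge` class, the `edge_to_triples` helper, the `_:role1`/`_:role2` node labels and the second, conflicting source (`<http://example.com/registry>`, with its `:CTO` role) are invented for illustration. Each edge becomes a fresh node typed with a qualified class, the simple binary triple is asserted once, and `:occurrenceOf` relates the two.

```python
from dataclasses import dataclass, field

@dataclass
class Edge:
    """A property-graph edge: one relation instance with its own attributes."""
    source: str
    label: str
    target: str
    properties: dict = field(default_factory=dict)

def edge_to_triples(edge, node_id, qualified_class):
    """Hypothetical mapping of one PG edge to a set of tuple 'triples'."""
    simple = (edge.source, edge.label, edge.target)  # the plain binary triple
    triples = {
        simple,
        (node_id, "rdf:type", qualified_class),
        (node_id, ":occurrenceOf", simple),  # quoted triple in object position
    }
    for key, value in edge.properties.items():
        triples.add((node_id, key, value))
    return triples

edges = [
    Edge(":Alice", ":worksFor", ":ACME",
         {":roleName": ":CEO", ":since": 2010, ":probability": 0.8,
          ":source": "<http://example.com/news>"}),
    # Hypothetical second source with a conflicting account.
    Edge(":Alice", ":worksFor", ":ACME",
         {":roleName": ":CTO", ":since": 2008, ":probability": 0.6,
          ":source": "<http://example.com/registry>"}),
]

graph = set()
for i, edge in enumerate(edges, start=1):
    graph |= edge_to_triples(edge, f"_:role{i}", ":EmployeeRole")

# Each source's account stays intact on its own node.
simple = (":Alice", ":worksFor", ":ACME")
nodes = sorted(s for s, p, o in graph if p == ":occurrenceOf" and o == simple)
accounts = {
    n: {p: o for s, p, o in graph if s == n and p not in ("rdf:type", ":occurrenceOf")}
    for n in nodes
}
print(nodes)  # ['_:role1', '_:role2']
```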

Usage with PROV-O

Using quoted statements to relate instances of a relation to the unqualified triple could present a useful way to look up qualifying information.

This pattern could also be useful when incrementally evolving a model where we only discover the need to reify certain relations as requirements emerge.

Rather than prospectively modeling all those classes up-front, we can start with a minimum-viable model and extend later as needed.

We can illustrate this using examples based on PROV-O.

PROV-O Qualified classes and properties provide elaborated information about binary relations asserted using Starting Point and Expanded properties.

We might start by talking about some activity using the Starting Point properties:

```turtle
:sortActivity a prov:Activity ;
    prov:startedAtTime "2011-07-16T01:52:02Z"^^xsd:dateTime ;
    prov:used :datasetA ;
    prov:generated :datasetB .
```

Later, we might find that we need to express the role of :datasetA. Using a quoted triple, we can add the qualified usage while retaining its relation to the original triple:

```turtle
:sortActivity a prov:Activity ;
    prov:startedAtTime "2011-07-16T01:52:02Z"^^xsd:dateTime ;
    prov:qualifiedUsage [
        a prov:Usage ;
        prov:entity :datasetA ;              ## The entity used by the prov:Usage
        prov:hadRole :inputToBeSorted ;      ## the role of the entity in this prov:Usage
        prov:specializationOf << :sortActivity prov:used :datasetA >> ;
    ] ;
    prov:used :datasetA ;                    ## retain the original asserted statement for backwards compatibility
    prov:generated :datasetB ;
.
```
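Under a simple tuple model (plain strings for terms, a nested tuple for the quoted triple, and `_:u` as a hypothetical stand-in for the anonymous `prov:Usage` node), looking up the qualifying information starting from the unqualified triple might look like the sketch below. `roles_for` is a hypothetical helper, not part of any PROV library.

```python
# Terms are plain strings; "_:u" stands in for the anonymous prov:Usage node.
used = (":sortActivity", "prov:used", ":datasetA")

graph = {
    (":sortActivity", "rdf:type", "prov:Activity"),
    (":sortActivity", "prov:startedAtTime", "2011-07-16T01:52:02Z"),
    (":sortActivity", "prov:qualifiedUsage", "_:u"),
    ("_:u", "rdf:type", "prov:Usage"),
    ("_:u", "prov:entity", ":datasetA"),
    ("_:u", "prov:hadRole", ":inputToBeSorted"),
    ("_:u", "prov:specializationOf", used),  # quoted triple as object
    used,                                    # original statement still asserted
    (":sortActivity", "prov:generated", ":datasetB"),
}

def roles_for(triple, graph):
    """Return prov:hadRole values of nodes that quote `triple` (hypothetical helper)."""
    qualifiers = {s for s, p, o in graph if p == "prov:specializationOf" and o == triple}
    return {o for s, p, o in graph if s in qualifiers and p == "prov:hadRole"}

print(roles_for(used, graph))  # {':inputToBeSorted'}
```

Because the plain `prov:used` triple is still asserted, consumers that only know the Starting Point properties see no change, while the quoted triple gives a path from it to the qualified usage.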

Conclusion

RDF-star and SPARQL-star add an extra level of complexity to standards that are already viewed as complex by many developers.

Knowing when and how to apply these extensions to best effect, and avoiding shooting oneself in the foot later, is not obvious to novice and casual users.

Practitioners in the community will likely need to build experience applying these extensions in the wild to figure out what works and what doesn’t.

Based on the examples presented above, I am not convinced that I will use RDF-star in my work.

Whilst there probably are cases where it is useful to make statements about statements, in over 10 years using RDF and SPARQL, I am yet to encounter them.

I am also unconvinced there are many real-world use cases where it is useful to make statements about some RDF-star notion of a globally unique triple.

Apart from trivial “toy” examples, I expect any real-world use cases will involve n-ary relations between a quoted statement and multiple other resources (IRIs and literals).

Certainly that should at least raise questions about the utility of quoted triples as subjects in statements.

Perhaps constraining their usage to the object position only, in the same way as literals, would help avoid a rash of questionable modeling practices.
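Such a constraint would be straightforward to check. As a hypothetical sketch in a simple tuple model (terms as strings, quoted triples as nested 3-tuples; the `is_quoted` and `violations` helpers are invented here and are not part of any RDF-star tooling), a validator only needs to inspect the subject position:

```python
def is_quoted(term):
    """In this sketch, a quoted triple is any term that is itself a 3-tuple."""
    return isinstance(term, tuple) and len(term) == 3

def violations(graph):
    """Return triples whose subject is a quoted triple (disallowed by the rule)."""
    return [t for t in graph if is_quoted(t[0])]

quoted = (":NYC", ":connection", ":SFO")
allowed = ("_:f1", ":occurrenceOf", quoted)   # quoted triple as object: fine
rejected = (quoted, ":departureGate", "10")   # quoted triple as subject: flagged
print(violations([allowed, rejected]))
```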

RDF-star seemingly seeks to solve a corner case of a corner case.

Given that, I find it curious that it has attracted so much attention in the community over the past few years.

Perhaps we should focus on efforts to help onboard new users and drive long-term adoption:

- How do we promote good RDF modeling practices?

- How do we know when we talk about instances of relations versus statements?

- How do we know when we talk about a triple versus an occurrence of a triple?

Presenting RDF-star as the solution for interoperability with property graphs also appears to be a red herring.

Without involving some node in the graph that gives an identity to an instance of a relation, this is destined to end up with confused and dissatisfied users.

I hope the bright minds that are involved in the RDF-star Working Group can find a way to square the circle.
